Technological paradoxes

In Season Four of the U.S. version of “The Office,” Michael Scott is driving with Dwight Schrute. The GPS tells them to make a right turn, while Dwight insists that the GPS means to “bear right,” because there’s a lake directly to their right. Of course, Michael ignores Dwight, declaring that the machine knows where it’s going, and promptly plows into the lake. Only once they are in the lake and abandoning the car does the GPS helpfully say to make a U-turn.

If only this happened only in sitcoms. By slavishly following their GPS, people have attempted to drive over bodies of water, zoomed across runways, and gotten stuck on a staircase. We use technology in hopes of getting an unambiguously right answer. Technology is great at solving problems within a narrow context; humans are better at solving problems across wider contexts.[1] Sticking with what technology says in the face of disconfirming evidence is a recipe for disaster.

Technology introduces its own set of contradictions. Does cheaper storage lead us to devalue our photographed memories? Or to put off hard thinking?[2] Does technology unite or divide society? Is efficiency maximized by never being bored and by always having a world of data at our fingertips? Do we have more or less control now that smartwatches let us track our health data? AI has been called transformational, yet we see it underserving certain groups. Perhaps it is life-changing for those unfortunate enough to experience its biases. Like all tools, technology has its pros and cons. A tool is only as good as the hand that wields it.

Artificial intelligence is a buzzword that means different things to different people. Britannica defines it as “the ability of a computer or a robot controlled by a computer to do tasks that are usually done by humans because they require human intelligence and discernment.” By that definition, quantitative trading is not necessarily AI, but algorithmic trading is, because a computer program executes trades without human input. Quantitative trading, by contrast, does not necessarily use a computer to trade automatically on certain factors.

AI is only as good as the data on which it is based. Data is what machine learning algorithms are trained on so that they “learn.” Say you want to train a machine to estimate the prevalence of a disease across a population. First, you’d feed it millions of data records so it can identify target groups and contributing factors. If the underlying data lacks diverse representation, or is biased or deficient in some other way, the outputs will not be accurate. It’s the old garbage-in, garbage-out (GIGO) adage. For example, using military health data to predict health outcomes for women would not be effective, given that the military population is overwhelmingly male.
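To make the GIGO point concrete, here is a minimal Python sketch, assuming a made-up disease that affects 10% of men and 30% of women and a training set that is about 85% male. The names `simulate_records`, `naive_prevalence`, and `reweighted_prevalence` are hypothetical, and the numbers are illustrative, not real health data. The naive estimate inherits the skew of the sample; reweighting by the population’s actual mix pulls it back toward the true rate.

```python
import random

# Hypothetical prevalence rates, chosen only for illustration.
PREVALENCE = {"male": 0.10, "female": 0.30}

def simulate_records(n, share_male, rng):
    """Generate n synthetic (sex, has_disease) records."""
    records = []
    for _ in range(n):
        sex = "male" if rng.random() < share_male else "female"
        has_disease = rng.random() < PREVALENCE[sex]
        records.append((sex, has_disease))
    return records

def naive_prevalence(records):
    """Share of records with the disease, ignoring who is in the sample."""
    return sum(has for _, has in records) / len(records)

def reweighted_prevalence(records, population_share_male):
    """Estimate prevalence per group, then weight by the population's mix."""
    by_group = {"male": [], "female": []}
    for sex, has in records:
        by_group[sex].append(has)
    rate = {sex: sum(v) / len(v) for sex, v in by_group.items()}
    return (population_share_male * rate["male"]
            + (1 - population_share_male) * rate["female"])

rng = random.Random(0)
# Training data skewed like a military dataset: about 85% male (an assumption).
skewed = simulate_records(100_000, share_male=0.85, rng=rng)

print(f"naive estimate:      {naive_prevalence(skewed):.3f}")            # near 0.13
print(f"reweighted estimate: {reweighted_prevalence(skewed, 0.5):.3f}")  # near 0.20
print("true population rate: 0.200")  # 0.5 * 0.10 + 0.5 * 0.30
```

Reweighting is a partial fix at best: it only works if the underrepresented group appears in the data at all, and it cannot correct for factors the data never recorded.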

Multiple instances of bias (or, at the very least, unequal treatment) have been documented in areas ranging from medical care to mortgages. Tweaking the algos won’t necessarily solve the mortgage problem, as lower-income and minority groups simply have less data to crunch. In the biggest study of its kind to date, researchers sifted through mortgage data and found that different predictive algorithms were unable to eradicate the unfairness.

“They found that when decisions about minority and low-income applicants were assumed to be as accurate as those for wealthier, white ones the disparity between groups dropped by 50%. For minority applicants, nearly half of this gain came from removing errors where the applicant should have been approved but wasn’t. Low income applicants saw a smaller gain because it was offset by removing errors that went the other way: applicants who should have been rejected but weren’t.”
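One way to see why thinner data can produce more errors is a toy simulation, sketched below under loudly stated assumptions: every applicant’s true creditworthiness comes from the same distribution, and the only difference between groups is how noisy the lender’s measurement is (a hypothetical `noise_sd` standing in for a thin versus thick credit file). This is not the study’s method or data, just an illustration of the mechanism.

```python
import random

def error_rates(noise_sd, n=100_000, threshold=0.0, seed=1):
    """Approve applicants whose *observed* score clears a threshold.

    Each applicant's true creditworthiness is drawn from the same
    distribution for every group; only the measurement noise differs.
    Returns (wrongly_rejected_rate, wrongly_approved_rate).
    """
    rng = random.Random(seed)
    wrongly_rejected = wrongly_approved = 0
    for _ in range(n):
        true_score = rng.gauss(0.0, 1.0)
        observed = true_score + rng.gauss(0.0, noise_sd)  # what the lender sees
        should_approve = true_score > threshold
        approved = observed > threshold
        if should_approve and not approved:
            wrongly_rejected += 1
        elif approved and not should_approve:
            wrongly_approved += 1
    return wrongly_rejected / n, wrongly_approved / n

# Hypothetical noise levels: a thick credit file vs. a thin one.
thick = error_rates(noise_sd=0.2)
thin = error_rates(noise_sd=0.8)
print("thick file errors (rejected, approved):", thick)
print("thin file errors  (rejected, approved):", thin)
```

With the same approval rule, the noisier group sees more errors in both directions, wrongly rejected and wrongly approved, which mirrors the two kinds of errors the quote describes.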

The National Institute of Standards and Technology (NIST) released a report, “Towards a Standard for Identifying and Managing Bias in Artificial Intelligence,” which identified three categories of AI bias: systemic, statistical, and human.

Statistical/computational biases are the tip of the iceberg. These are errors that arise when the sample is not representative of the population (e.g., the military example above). Human biases are errors in human thought across individuals, groups, and institutions. Examples include the cognitive and perceptual biases that all humans fall victim to, such as confirmation bias and anchoring bias. Systemic biases refer to the ways in which institutions disadvantage certain groups and favor others. They need not be conscious and can arise simply from following existing rules or norms.

The report says that our present attempts to deal with bias in artificial intelligence concentrate too heavily on statistical/computational factors, at the expense of the other two categories. Importantly, it is impossible to attain zero risk of bias in an AI system.[3]

“Current attempts for addressing the harmful effects of AI bias remain focused on computational factors such as representativeness of datasets and fairness of machine learning algorithms. These remedies are vital for mitigating bias, and more work remains. Yet, as illustrated in Fig. 1 [above], human and systemic institutional and societal factors are significant sources of AI bias as well, and are currently overlooked. Successfully meeting this challenge will require taking all forms of bias into account…Bias is neither new nor unique to AI and it is not possible to achieve zero risk of bias in an AI system.”

Artificial intelligence can also amplify system vulnerabilities. Algorithmic trading has climbed as a percentage of stock market trades, with some estimates putting it as high as 80% of total stock market turnover. Instead of talking about fat-finger stock market crashes, we will be talking about fake-finger crashes.

Consider a computer system that monitors statistics along with social media trends and trades when certain thresholds are crossed. For example, maybe when mentions of Tesla spike on Twitter, the stock price tends to rise. Bots could then spam mentions of Tesla to push up the price before their operators dump the shares.
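A minimal sketch of such a rule, assuming the system buys when hourly mention counts jump more than three standard deviations above the previous five hours. The function `spike_signals`, the window, the threshold, and the mention counts are all hypothetical; real trading systems are far more elaborate. The point is that any rule keyed to a public signal can be triggered by whoever can fake that signal.

```python
from statistics import mean, stdev

def spike_signals(mentions, window=5, z_threshold=3.0):
    """Return indices where mentions jump far above the recent baseline.

    Hypothetical rule: signal a "buy" when the current count's z-score
    against the previous `window` counts exceeds `z_threshold`.
    """
    signals = []
    for t in range(window, len(mentions)):
        history = mentions[t - window:t]
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and (mentions[t] - mu) / sigma > z_threshold:
            signals.append(t)
    return signals

# Hourly mention counts (made-up numbers).
organic = [100, 104, 98, 101, 99, 103, 97, 102, 100, 101]
# Same series, but a bot network injects 400 fake mentions at hour 7.
spammed = organic.copy()
spammed[7] += 400

print(spike_signals(organic))  # []
print(spike_signals(spammed))  # [7]
```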

In 2016, Microsoft released an AI-based conversational chatbot named Tay on Twitter to interact with people. The more people chatted with Tay, the smarter it was supposed to become. Microsoft built the bot using “relevant public data” that had been modeled, cleaned, and filtered. But because Tay kept learning from its conversations, it was unsurprisingly targeted by a coordinated group of people who fed it racist and insulting content. And oh, did my little Tay learn quickly! Within a few hours of its release, it began replying to messages with offensive and racist content. There was no coherent ideology: Tay alternately lauded and deplored feminism. Of course, Hitler became part of the conversation, as predicted by Godwin’s law, which states that as an online discussion grows longer, the probability of a comparison to Nazis or Adolf Hitler approaches one.
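The sketch below is not how Tay worked; it is a toy, assuming a bot that answers each prompt with the reply it has seen most often. The class `ParrotBot` is hypothetical, and a placeholder string stands in for the offensive content. It shows how an online learner with no filter on what it learns can be steered by a coordinated group that simply outnumbers the curated data.

```python
from collections import Counter, defaultdict

class ParrotBot:
    """A deliberately naive chatbot that learns replies from users.

    It remembers every (prompt, reply) pair it sees and answers a prompt
    with the reply it has seen most often. A toy, not Tay's design.
    """

    def __init__(self):
        self.replies = defaultdict(Counter)

    def learn(self, prompt, reply):
        self.replies[prompt.lower()][reply] += 1

    def respond(self, prompt):
        seen = self.replies.get(prompt.lower())
        if not seen:
            return "Tell me more!"
        return seen.most_common(1)[0][0]

bot = ParrotBot()
# Curated "relevant public data": a couple of clean examples.
bot.learn("what do you think of people?", "people are great")
bot.learn("what do you think of people?", "people are great")
print(bot.respond("What do you think of people?"))  # people are great

# A coordinated group floods the bot with the same toxic reply
# (a placeholder string stands in for the actual content).
for _ in range(50):
    bot.learn("what do you think of people?", "<TOXIC REPLY>")
print(bot.respond("What do you think of people?"))  # <TOXIC REPLY>
```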

Technology is simultaneously an isolating and a connecting force. It fosters unity as well as splintering. It enables transnational communities around even the most esoteric interests. One of my favorite subreddits is r/babiestrappedinknees, which shows pictures of knees that look like babies’ faces. Yet the argument can be made that technology makes us more tribal. By being served only what we want on Facebook or Google, we miss the opposing viewpoint, and our views become an echo chamber. Seeking out a different viewpoint humanizes us. The paradox is that by giving us what we desire, technology inhibits our knowledge and growth. By the same token, even as we are in the midst of an information overload, we suffer an information deficit.
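The echo-chamber dynamic can be sketched as a feedback loop, assuming a greedy recommender that always serves the topic a user has clicked most, and a user who clicks whatever is served. The function `recommend` and the topic labels are hypothetical; real feeds blend many signals, but the loop shows how a slight initial lean can harden into seeing only one side.

```python
from collections import Counter

def recommend(click_history, topics):
    """Greedy recommender: serve the topic the user has clicked most.

    Ties go to whichever topic comes first in `topics`. Hypothetical and
    deliberately simplistic.
    """
    counts = Counter(click_history)
    return max(topics, key=lambda t: counts[t])

topics = ["left", "center", "right"]
clicks = ["left", "center", "right", "left"]  # a slight initial lean

for _ in range(20):
    shown = recommend(clicks, topics)
    clicks.append(shown)  # assume the user clicks whatever is shown

print(Counter(clicks[-20:]))  # Counter({'left': 20})
```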

As technology grows, so too can its vulnerabilities. Greater interconnection can leave less slack in the system. We’ve seen this directly in the COVID-era supply chain woes caused by offshoring and just-in-time (JIT) inventories.[4] In the stock market, look no further than flash crashes, some of which have been set off by fat-finger errors. Yet technology can also be used to build in redundancy. For example, Netflix places content on servers closer to subscribers to reduce downtime.[5] As of November 2021, it had 17,000 servers across 158 countries. Another example of Netflix’s focus on resiliency is Chaos Monkey, a tool that randomly shuts down machines in its production network.
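The kind of weakness Chaos Monkey is meant to flush out can be sketched in a few lines. Assume a hypothetical service with three replicas and two hypothetical clients: one hard-coded to a single replica, and one that fails over to any replica still running. The `chaos_monkey` function here simply shuts down one random replica per trial; Netflix’s real tool terminates instances in production, and none of these names come from it.

```python
import random

class Service:
    """A hypothetical service with several replicas (True = running)."""
    def __init__(self, replicas):
        self.up = [True] * replicas

def naive_client(service):
    # Hard-coded to replica 0: works until that one machine dies.
    return service.up[0]

def failover_client(service):
    # Succeeds as long as any replica is still running (it can fail over).
    return any(service.up)

def chaos_monkey(service, rng):
    """Shut down one randomly chosen replica."""
    service.up[rng.randrange(len(service.up))] = False

def count_failures(client, replicas=3, trials=1_000, seed=7):
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        service = Service(replicas)  # fresh, fully healthy deployment
        chaos_monkey(service, rng)
        if not client(service):
            failures += 1
    return failures

print("naive client failures:   ", count_failures(naive_client))     # roughly a third
print("failover client failures:", count_failures(failover_client))  # 0
```

Both clients work perfectly until something breaks. Running chaos deliberately is what surfaces the hidden single point of failure in the naive client before a real outage does.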

Imagine a monkey entering a ‘data center’, these ‘farms’ of servers that host all the critical functions of our online activities. The monkey randomly rips cables, destroys devices and returns everything that passes by the hand [i.e. flings excrement]. The challenge for IT managers is to design the information system they are responsible for so that it can work despite these monkeys, which no one ever knows when they arrive and what they will destroy. - Antonio Garcia Martinez

The more I use technology to track something, the less control I have over the desired behavior. Instead, tracking heightens my anxiety and, paradoxically, produces the opposite effect. In a prior post, I wrote about tracking anxiety. For me, it is concentrated in two areas: activity and sleep.[6][7] Sleep anxiety would begin soon after waking up (upon checking my sleep app) and build to a crescendo over the day. I made sure to hit my move target on the Apple Watch even if I had already done my full workout. Both behaviors persisted despite my knowing the trackers are inaccurate. As a millennial, I am often too tied to instantaneous feedback and the temporary neurochemical high.

Cheaper storage comes with its own contradictions. Who among us doesn’t save every photo and email out of an abundance of caution, enabled by storage’s minimal cost? Our hoarding decreases the utility of the information that is actually valuable. By storing everything, we risk valuing nothing. Further, storing huge quantities of pictures gives us a greater sense that time is fleeting. Our behavior is changed by access to cheap storage and good search capabilities: we worry that we might delete something we will need later.

Are we going to go through thousands of photos and emails? Will our kids go through all of our emails and work documents after we die? What are we trying to accomplish? It’s the illusion of control. It’s a false external memory. In theory, we are able to retain more information. In practice, we feel pushed to consume more at an increasing rate. Instead of pausing when we reach something that demands deep thought, we too often file it away and promise ourselves we will look at it later [looks directly at the person with fifty tabs currently open in Chrome]. On top of that, we are training ourselves to have shorter attention spans with Twitter, TikTok, and Instagram.

Technology has allowed us never to feel bored. Yet by never letting ourselves daydream, we rob ourselves of a key benefit. The default mode network (DMN) refers to a network of brain regions that is active when you are not focused on a specific task. It was discovered when researchers noticed surprisingly high levels of brain activity in people who were not engaged in any particular mental task. The DMN is posited to be behind those “aha!” moments when you are in the shower and have a new insight.[8] This is why taking breaks throughout the day is critical. Your brain keeps reviewing what you’ve been studying and making new connections, even when that processing never rises to the level of conscious awareness.

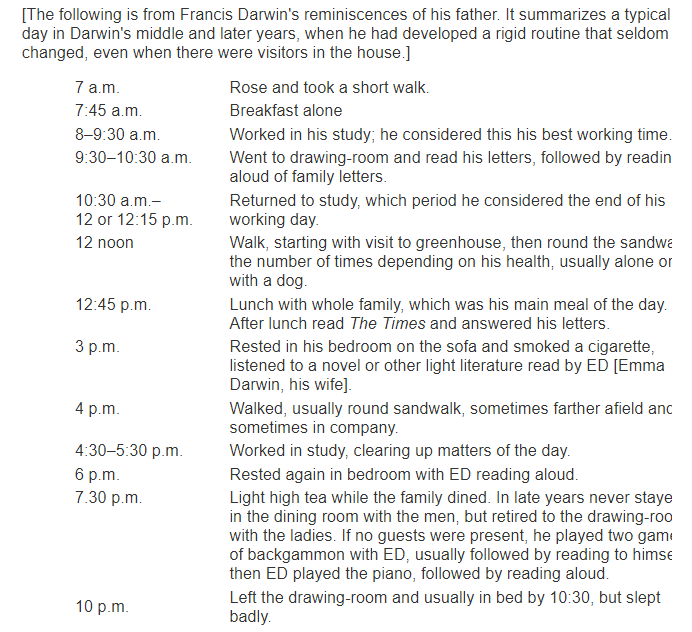

Further, our focus on efficiency comes with the paradox of shortchanging the long run. For one, myopically focusing on efficiency reduces these “aha” moments. Boredom and daydreaming have their place. Don’t confuse efficiency with effectiveness. Both words refer to producing some sort of result. Efficiency is producing a result with the least amount of effort, materials, time, or energy. Effectiveness is generating the desired result. Charles Darwin, easily one of the most effective scientists of all time, took multiple rest breaks and walks every day. His schedule shows him “working” in two ninety-minute periods, ending around noon.

I often fall victim to the efficiency trap. At my last job, I told myself I’d take a break, or read anything beyond my report or the stocks I covered, only once I was finished. I waited until I couldn’t think anymore before going for a short walk. I denied myself unexpected insights and the cross-pollination of ideas. Even today, I struggle to read for pleasure: there “should” be something I’m learning at the same time.

Further reading:

Confessions of an Information Hoarder

The End of Infinite Data Storage Can Set You Free

Importance of rest for productivity and creativity

[2] We punt this information into storage somewhere else and promise we will access it later… yet how often do we actually do this?

[3] This in and of itself is not a deal-breaker, so long as the biases can be mitigated. After all, it’s not as if we have achieved zero bias via human processes!

[4] In a complex system, seemingly unimportant components reveal themselves to be integral at certain points. A Nebraskan company has seen six-figure projects held up because a $200 switch is stuck in China.

[5] Netflix ships three copies of each title to these distributed servers, each at a different bitrate quality level. Content is delivered to the servers during off-peak hours, based on popularity.

[6] Hence reordering a Whoop, while enticing, is currently out of the question.

[7] My friend Tom Morgan told me the official name for sleep-tracking anxiety is orthosomnia. Here’s a good book if you’re seeking a better night’s rest.

[8] The DMN is also thought to be responsible for unhelpful rumination in depression.